LLM Fundamentals

Understanding Large Language Models: theory, neural networks, and training processes that power modern AI.

🤖 Language Models

Language Model

A type of AI model that processes, generates, and transforms human language, typically by predicting which words are likely to come next in a sequence.
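To make the idea concrete, here is a deliberately tiny sketch of a language model that counts which word follows which (a "bigram" model). This is an illustrative stand-in, not how LLMs work internally: the corpus and the function names `train_bigram` and `next_word_probs` are made up for this example.

```python
from collections import Counter, defaultdict


def train_bigram(text):
    """Count how often each word is followed by each other word."""
    counts = defaultdict(Counter)
    words = text.lower().split()
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts


def next_word_probs(counts, word):
    """Convert the follow-up counts for `word` into probabilities."""
    followers = counts[word]
    total = sum(followers.values())
    if total == 0:
        return {}  # word never seen with a follower
    return {w: c / total for w, c in followers.items()}


# Hypothetical toy corpus, chosen only for illustration.
corpus = "the cat sat on the mat the cat ate the fish"
model = train_bigram(corpus)
probs = next_word_probs(model, "the")
print(probs)  # "cat" follows "the" in 2 of 4 cases, so it gets 0.5
```

Even this toy model can "generate" text by repeatedly picking a likely next word. LLMs pursue the same next-word goal with a neural network instead of a lookup table of counts.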

Large Language Models (LLMs)

Advanced AI models that learn from enormous text datasets, developing the ability to understand context and produce natural, human-quality language responses.

What distinguishes LLMs from earlier language models? Their breakthrough lies in two fundamental advances:

KEY DIFFERENTIATORS

Scale

LLMs are trained on enormous datasets, often spanning billions to trillions of words drawn largely from the public internet. But it's not just about the size of the dataset: LLMs themselves are massive, containing billions of parameters (the adjustable numbers the model learns during training).
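For a rough feel of how parameter counts grow, the sketch below counts the weights and biases in a plain fully connected network. The layer sizes are hypothetical, and real LLMs use a different architecture, so treat this only as an illustration of the arithmetic.

```python
def count_parameters(layer_sizes):
    """Count weights and biases in a fully connected network.

    layer_sizes lists the number of nodes in each layer, input first.
    """
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        total += n_in * n_out  # one weight per connection between the layers
        total += n_out         # one bias per node in the receiving layer
    return total


# Hypothetical layer sizes, for illustration only.
small = count_parameters([4, 8, 2])
wide = count_parameters([1000, 1000, 1000])
print(small)  # 58
print(wide)   # 2002000
```

Widening each layer from a handful of nodes to a thousand takes the count from dozens to millions, which is how models with many wide layers reach billions of parameters.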

Capabilities

This massive scale enables LLMs to develop nuanced language comprehension and extensive knowledge across domains. They excel at sophisticated linguistic challenges that previously required specialized models or human intervention.

✨ Emergent Properties

Emergent Properties: Unexpected abilities that develop naturally from the learning process, without being directly taught or programmed.

🎵 Musician Analogy

Consider a musician who studies thousands of songs across different genres. Beyond memorizing melodies, they develop an intuitive sense of harmony, rhythm, and musical structure that lets them improvise and create entirely new compositions.

💡 The Key Insight: These emergent capabilities represent LLMs' most remarkable feature. They enable models to solve problems and perform tasks that weren't part of their original training objectives.

In essence, LLMs are often described as the closest thing we currently have to artificial general intelligence.

🧠 Neural Networks: The Foundation of LLMs

Neural Network: A computing architecture made up of connected processing units called nodes, loosely modeled after how biological neurons communicate in the brain.

🧱 Components of a Neural Network

Nodes (Neurons)

Nodes are the fundamental computing elements that accept data, perform calculations, and transmit results to connected nodes in the network.

🏭 Assembly Line Metaphor: Think of nodes as workers in an assembly line. Each worker (node) has a specific task, and they pass their work (output) to the next worker (node) in the line.

Weights

Weights are numerical values attached to the connections between nodes. Each weight determines how strongly the signal on its connection influences the receiving node.

🔊 Volume Control Metaphor: Think of weights like volume controls on your phone or TV. Just as you turn up the volume for important sounds and turn down distracting noise, the network adjusts weights to amplify important information and reduce irrelevant signals.

Biases

Biases provide nodes with baseline activation levels, enabling them to respond appropriately even when receiving weak or no input signals.

🌡️ Thermostat Metaphor: Think of biases like a thermostat's default temperature setting. Even when no one is home, the thermostat maintains a baseline temperature. Similarly, biases give nodes a starting point, ensuring they can produce useful output even with minimal input.
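Putting the three components together, a single node can be sketched as a weighted sum of its inputs plus a bias, passed through an activation function. The sigmoid activation and the specific numbers are assumptions made for this example; the text above does not prescribe them.

```python
import math


def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def neuron(inputs, weights, bias):
    """Compute one node's output: activation(weighted sum + bias)."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return sigmoid(z)


# Hypothetical weights: the first input matters more than the second.
weights = [0.8, -0.3]

# With no input signal, the bias alone decides the output.
no_bias = neuron([0.0, 0.0], weights, bias=0.0)    # exactly 0.5
with_bias = neuron([0.0, 0.0], weights, bias=0.5)  # above 0.5
print(no_bias, with_bias)
```

The second call shows the thermostat idea: even with all inputs at zero, a positive bias shifts the node's baseline output upward.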

🎯 Training a Neural Network

TRAINING COMPONENTS

Predicted Output

What the neural network actually produces as output, based on its current configuration of weights and biases.

Desired Output

The correct result that the neural network should ideally produce for a given input, also known as the target or ground truth.
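The gap between predicted and desired output is usually summarized as a single number called the loss. The sketch below uses mean squared error because it is easy to follow. This is an assumption for illustration, as LLMs are typically trained with a cross-entropy loss instead.

```python
def mean_squared_error(predicted, desired):
    """Average of the squared differences between prediction and target."""
    if len(predicted) != len(desired):
        raise ValueError("predicted and desired must have the same length")
    squared = [(p - d) ** 2 for p, d in zip(predicted, desired)]
    return sum(squared) / len(squared)


# Hypothetical outputs for a three-value prediction.
predicted = [0.9, 0.2, 0.4]
desired = [1.0, 0.0, 0.0]

loss = mean_squared_error(predicted, desired)
perfect = mean_squared_error(desired, desired)
print(loss)     # roughly 0.07
print(perfect)  # 0.0 when the prediction matches the target exactly
```

A lower loss means the prediction is closer to the desired output, which is the quantity training tries to reduce.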

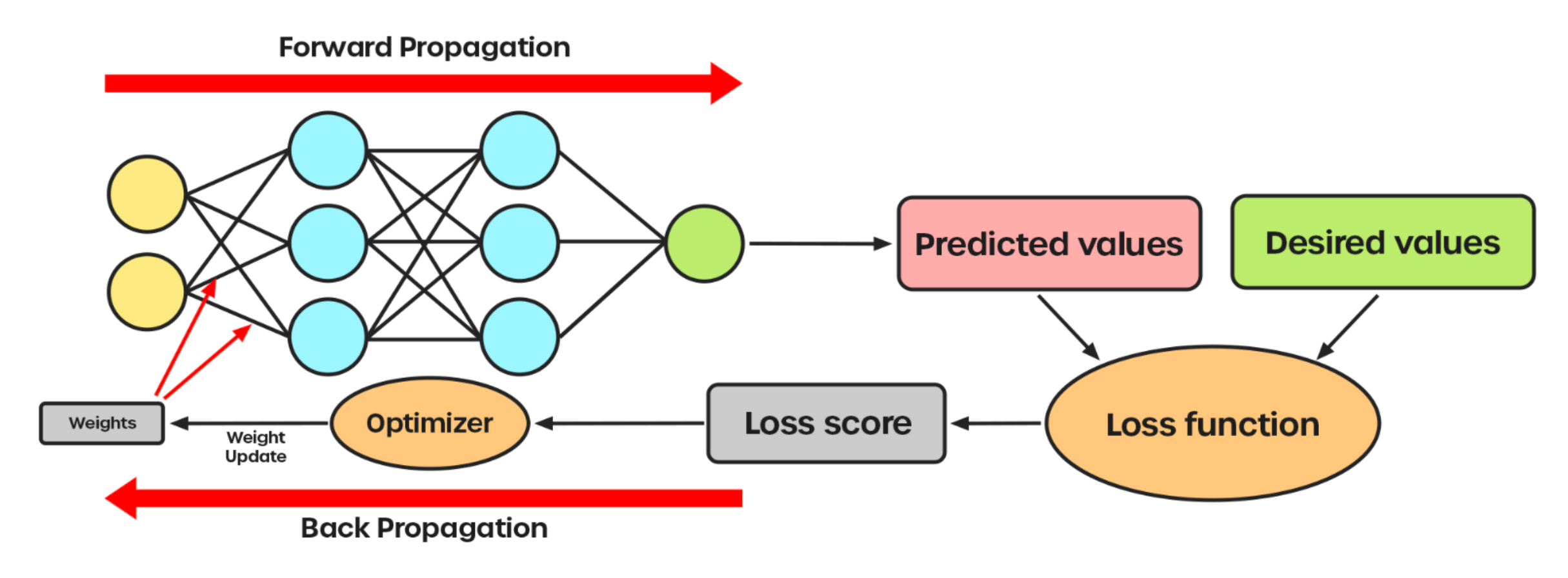

Complete training process showing forward propagation, loss calculation, and backward propagation with weight updates

🔄 The Training Process

Training: The iterative process of refining a neural network's performance by modifying its weights and biases through exposure to example data and feedback.

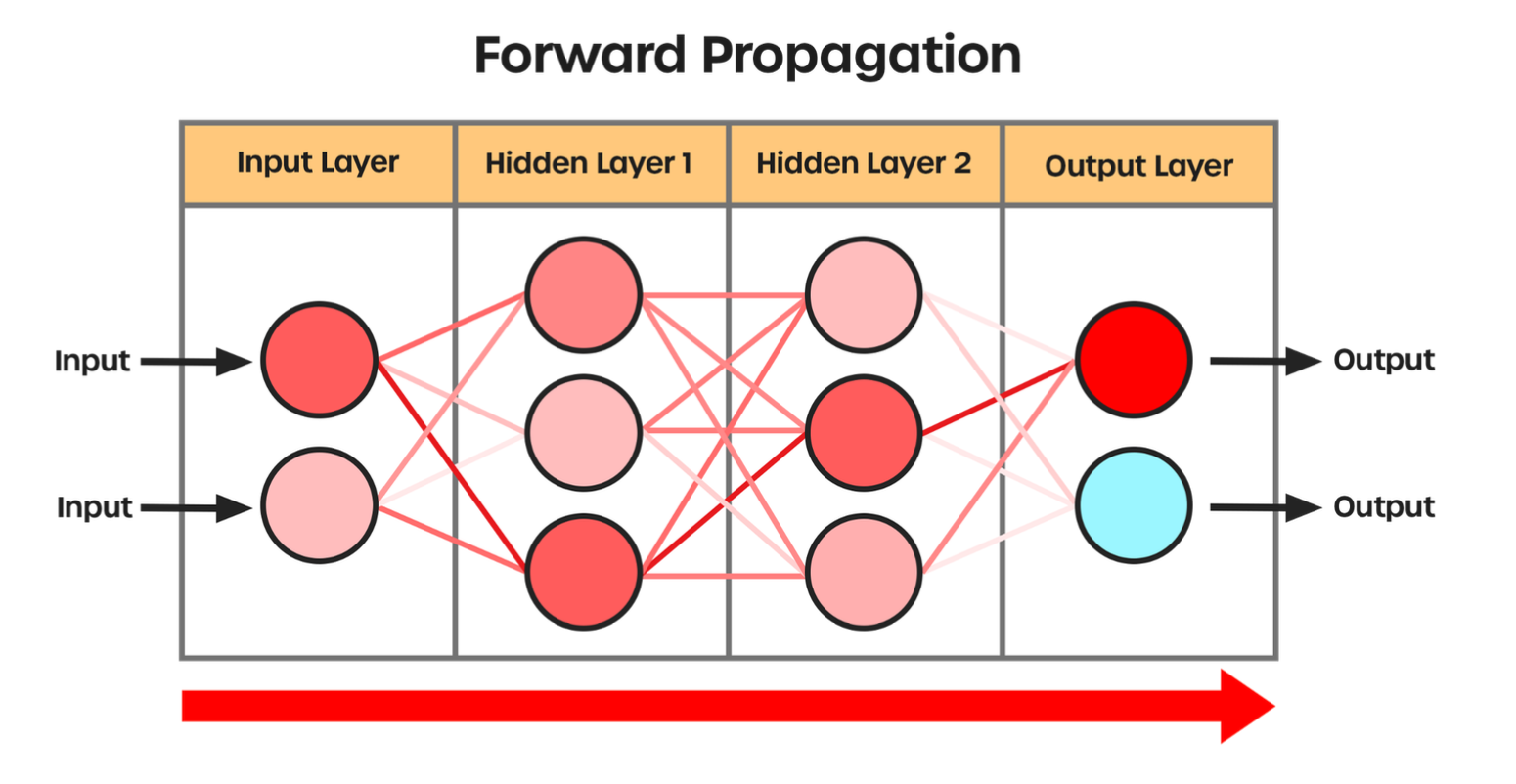

Forward Propagation

Input data travels through the network's layers sequentially, with each layer transforming the information until reaching the final output layer that produces the network's prediction.

Data flows forward through the network layers to generate predictions

💡 Think of it as: Information moving through the network like ingredients through a recipe's steps, where each layer adds its own transformation to create the final result.
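As a minimal sketch of forward propagation, the code below passes an input through two fully connected layers in order. The layer sizes, weights, and the ReLU activation are hypothetical choices for this example.

```python
def relu(x):
    """Pass positive values through, clamp negatives to zero."""
    return max(0.0, x)


def identity(x):
    """Leave the value unchanged (used for the output layer here)."""
    return x


def dense_layer(inputs, weights, biases, activation):
    """Each row of `weights` holds the incoming weights for one node."""
    return [
        activation(sum(x * w for x, w in zip(inputs, row)) + b)
        for row, b in zip(weights, biases)
    ]


def forward(inputs, layers):
    """Feed the values through every layer in sequence."""
    values = inputs
    for weights, biases, activation in layers:
        values = dense_layer(values, weights, biases, activation)
    return values


# Hypothetical network: 2 inputs -> 2 hidden nodes -> 1 output node.
layers = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, -1.0], relu),
    ([[2.0, 1.0]], [0.1], identity),
]
prediction = forward([3.0, 1.0], layers)
print(prediction)  # a single value close to 5.1
```

The hidden layer turns `[3.0, 1.0]` into `[2.0, 1.0]`, and the output layer combines those into one prediction.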

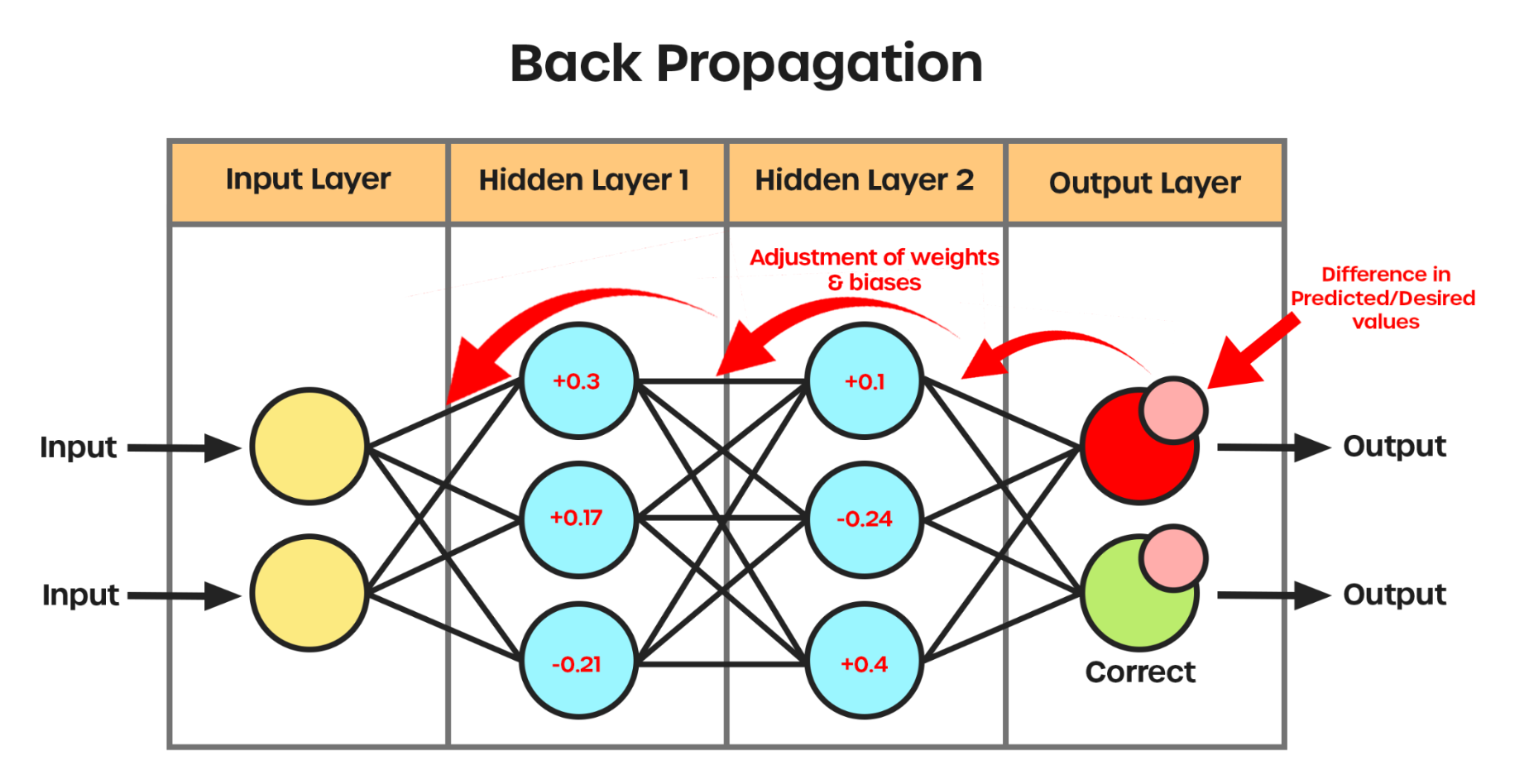

Backpropagation

After comparing the network's prediction with the correct answer, this process works backward through the layers to determine how much each weight and bias contributed to the error. Those values are then adjusted to reduce future prediction errors.

Error signals propagate backward to adjust weights and biases throughout the network

💡 Think of it as: A detective retracing steps backward from a crime scene, identifying which clues (connections) were helpful or misleading, then adjusting investigative priorities accordingly.
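For a single node, the backward step can be sketched with the chain rule: the error is traced back through the activation to each parameter. The sigmoid activation, squared-error loss, and starting values are assumptions for this example. The finite-difference check at the end is included only to confirm the hand-derived gradient.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(x, w, b):
    """Prediction of a one-input node."""
    return sigmoid(w * x + b)


def loss(x, y, w, b):
    """Squared error between prediction and desired output y."""
    return (forward(x, w, b) - y) ** 2


def gradients(x, y, w, b):
    """Chain rule: dL/dw = dL/da * da/dz * dz/dw (and likewise for b)."""
    a = forward(x, w, b)
    dloss_da = 2.0 * (a - y)   # how the loss changes with the output
    da_dz = a * (1.0 - a)      # derivative of the sigmoid
    dloss_dz = dloss_da * da_dz
    return dloss_dz * x, dloss_dz  # (dL/dw, dL/db)


# Hypothetical input, target, and starting parameters.
x, y, w, b = 1.5, 1.0, 0.2, -0.1
grad_w, grad_b = gradients(x, y, w, b)

# Sanity check: estimate dL/dw numerically with a tiny nudge to w.
eps = 1e-6
numeric_grad_w = (loss(x, y, w + eps, b) - loss(x, y, w - eps, b)) / (2 * eps)

# One small step against the gradient should lower the loss.
step = 0.1
new_loss = loss(x, y, w - step * grad_w, b - step * grad_b)
print(grad_w, numeric_grad_w, loss(x, y, w, b), new_loss)
```

In a multi-layer network the same chain-rule bookkeeping is repeated layer by layer, starting at the output and moving toward the input.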

🔁 The Training Loop

This cycle of prediction and correction repeats thousands or millions of times, with each iteration fine-tuning the network's parameters toward better accuracy.

💡 Key Insight: Picture learning to play basketball – each shot attempt (forward propagation) shows your current aim, while analyzing misses (backpropagation) helps you adjust your technique until your accuracy improves.
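The whole loop can be sketched on a deliberately small problem: a model with one weight and one bias learning the line y = 2x + 1 by gradient descent. The data, learning rate, and number of iterations are hypothetical values chosen so the example converges quickly.

```python
def train(data, learning_rate=0.05, epochs=2000):
    """Fit y = w * x + b by repeating predict, compare, adjust."""
    w, b = 0.0, 0.0  # start with an uninformed model
    n = len(data)
    for _ in range(epochs):
        grad_w = 0.0
        grad_b = 0.0
        for x, y in data:
            error = (w * x + b) - y      # forward pass, then compare to target
            grad_w += 2 * error * x / n  # gradient of mean squared error w.r.t. w
            grad_b += 2 * error / n      # gradient of mean squared error w.r.t. b
        w -= learning_rate * grad_w      # adjust parameters against the gradient
        b -= learning_rate * grad_b
    return w, b


# Hypothetical training examples generated from y = 2x + 1.
data = [(x, 2.0 * x + 1.0) for x in (0.0, 1.0, 2.0, 3.0)]
w, b = train(data)
print(round(w, 4), round(b, 4))  # approaches 2.0 and 1.0
```

Each pass through the outer loop is one "shot and adjustment" in the basketball picture. Training an LLM follows the same pattern, with vastly more parameters and data.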

Remember: The true power of LLMs lies in their emergent properties: capabilities that arise naturally from their massive scale and training, allowing them to perform tasks they were never explicitly programmed for. This is what sets LLMs apart and why they are often described as the closest thing we currently have to artificial general intelligence.